1

Introduction

1.1

This Prudential Regulation Authority (PRA) supervisory statement (SS) sets out the PRA’s expectations for banks’1 model risk management (MRM). The PRA considers model risk to be a risk in its own right.

Footnotes

- 1. While the scope of this SS includes banks, building societies, and designated investment firms, the term ‘banks’ is used in the title to make it clear that the expectations do not apply to insurers or reinsurers.

- 17/05/2024

1.2

This SS is relevant to all regulated United Kingdom (UK)-incorporated banks, building societies and PRA-designated investment firms with internal model approval to calculate regulatory capital requirements.2 The expectations in this SS do not apply to firms that do not have permission to use internal models to calculate regulatory capital, or to third-country firms operating in the UK through a branch. However, the PRA considers that those firms may find the principles useful, and they are welcome to consider them when managing model risk within their firm. Credit unions, insurers, and reinsurers are not in scope of the MRM expectations in this supervisory statement.

Footnotes

- 2. These are firms with approval to use internally developed models to calculate regulatory capital requirements for credit risk (Internal Ratings Based approaches), market risk (Internal Model Approach) or counterparty credit risk (Internal Model Method).

1.3

In the rest of this SS, ‘firms’ means UK banks, building societies and PRA-designated investment firms with internal model approval. The purpose of this SS is to support firms to strengthen their policies, procedures, and practices to identify, manage, and control the risks associated with the use of all models, developed in-house or externally, including vendor models,3 and models used for financial reporting purposes. The principles are designed to complement, not supersede, existing supervisory expectations that have been published for selected model types. Firms should continue to apply the supervisory expectations relevant to them and their particular models, including attestations and self-assessments where applicable.

Footnotes

- 3. Vendors and external consultants may find this supervisory statement useful as it sets out the PRA's minimum expectations for firms’ own MRM frameworks.

1.4

The SS is structured around five high-level principles designed to cover all elements of the model lifecycle. The principles set out what the PRA considers to be the core disciplines necessary for a robust MRM framework to manage model risk effectively across all model and risk types. The PRA’s desired outcome is that firms take a strategic approach to MRM as a risk discipline in its own right.

Implementation and self-assessments

1.5

The policy comes into effect on Friday 17 May 2024.

1.6

Before the policy comes into effect, firms are expected to conduct an initial self-assessment of their implemented MRM frameworks against these principles and, where relevant, to prepare remediation plans to address any identified shortcomings.

1.7

Self-assessments should be updated at least annually thereafter, and any remediation plans should be reviewed and updated on a regular basis. Both the findings from the self-assessment and remediation plans should be documented and shared with firms’ boards in a timely manner. Firms’ boards should be updated regularly on remediation progress.

1.8

The relevant SMF(s) accountable for overall MRM should be responsible for ensuring that remediation plans are put in place where necessary with clear ownership of any necessary actions. Firms are not expected to share the remediation plans or self-assessment routinely with the PRA, but should be able to provide them upon request.

1.9

Firms that first receive permission to use an internal model to calculate regulatory capital after the publication of this policy will have 12 months from the grant of that permission to comply with the expectations in this policy.

2

Background

The use of models

2.1

Firms’ use of models4 covers a wide range of areas relevant to their business decision making, risk management, and reporting. Business decisions should be understood here as all decisions made in relation to the general business and operational banking activities, strategic decisions, financial, risk, capital, and liquidity measurement and reporting, and any other decisions relevant to the safety and soundness of firms. Firms' increasing reliance on models and scenario analysis to assess future risks, and the evolution of sophisticated modelling techniques, highlight the need for sound model governance and effective MRM practices. Inadequate or flawed model design or implementation, or inappropriate use of models, could lead to adverse consequences.

Footnotes

- 4. Model use is defined here as using a model’s output as a basis for informing business decisions.

Quantitative methods and models

2.2

A wide variety of quantitative calculation methods, systems, approaches, end-user computing (EUC) applications and calculators (hereinafter collectively ‘quantitative methods’) are often used in firms’ daily operations, ie their output supports decisions made in relation to general business activities, strategic decisions, pricing, financial, risk, capital and liquidity management or reports, and other operational banking activities. Good risk management practice involves testing the quantitative methods that support business decisions for correct implementation and use.

2.3

Models are a subset of quantitative methods. The outputs of models are estimates, forecasts, predictions, or projections, which may themselves be input data or parameters for other quantitative methods or models. Model outputs are inherently uncertain because models are imperfect representations of real-world phenomena, are simplifications (often intentional) of complex real-world systems and processes, and are based on a limited set of observations. In addition to testing for correct implementation, good risk management practices for models involve:

- i. verifying the applicability of models to the decisions they support; and

- ii. assessing the validity of the underlying model assumptions in the business context of those decisions.

2.4

For the purposes of the expectations contained within this SS, a model is defined as a quantitative method that applies statistical, economic, financial, or mathematical theories, techniques, and assumptions to process input data into output. Input data can be quantitative and/or qualitative in nature or expert judgement-based and the output can be quantitative or qualitative. A working definition of a model is important as it brings consistency and clarity for firms implementing MRM frameworks.

2.5

However, advances in technology and data processing power increasingly enable deterministic quantitative methods, such as decision-based rules or algorithms, to become vastly more complex and statistically orientated, characteristics that would typically warrant challenge to their applicability in supporting important business decisions. Understanding the potential impact that the use of models and complex quantitative methods could have on firms’ business and safety and soundness is therefore equally important.

Model risk

2.6

Model risk is the potential for adverse consequences from model errors or the inappropriate use of modelled outputs to inform business decisions. These adverse consequences could lead to a deterioration in the prudential position, non-compliance with applicable laws and/or regulations, or damage to a firm’s reputation. Model risk can also lead to financial loss, as well as qualitative limitations such as the imposition of restrictions on business activities.

2.7

Models’ outputs may be affected by the choice and suitability of the methodology, the quality and relevance of the data inputs, and the integrity of implementation and ongoing scope of applicability of a model. The continued suitability of the model may also be impacted by changes to the validity of any assumptions supporting the model’s use case (eg the macroeconomic assumptions encoded in the model, or assumptions about the continued relationship between variables in historical data) or inappropriate use.

2.8

Individual model risk increases with model complexity. For example, the PRA would expect higher model risk for more complex models that are difficult to understand or explain in non-technical terms, or for which it is difficult to anticipate the model output given the input. Similarly, higher uncertainty in relation to inputs and model construct (eg complex data structures, or low-quality or unstructured data) would increase model risk, as would results and findings that cannot easily be repeated or reproduced. Overall (aggregate) model risk increases with larger numbers of inter-related models and interconnected data structures and data sources.
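
To illustrate why aggregate model risk grows with the number of inter-related models, the following Python sketch records, for each model, which other models consume its output, and then traverses that graph to list every model affected directly or indirectly by an upstream model. The model names and dependencies are hypothetical, and a breadth-first traversal is simply one convenient way to compute the result.

```python
from collections import deque

# Hypothetical dependency map: each key is a model ID, and its value lists the
# models that consume that model's output directly. Names are illustrative.
FEEDS_INTO: dict[str, list[str]] = {
    "macro_scenarios": ["PD_retail", "LGD_retail"],
    "PD_retail": ["IFRS9_ECL", "RWA_credit"],
    "LGD_retail": ["IFRS9_ECL", "RWA_credit"],
    "IFRS9_ECL": ["capital_plan"],
    "RWA_credit": ["capital_plan"],
    "capital_plan": [],
}


def downstream_models(model_id: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every model that depends on model_id, directly or indirectly.

    Uses a breadth-first traversal; the `seen` set guards against revisiting
    models reachable by more than one path (and against cycles).
    """
    seen: set[str] = set()
    queue = deque(graph.get(model_id, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        queue.extend(graph.get(current, []))
    return seen


if __name__ == "__main__":
    # A weakness in the scenario model propagates to five downstream models.
    print(sorted(downstream_models("macro_scenarios", FEEDS_INTO)))
```

In this hypothetical map, a single scenario model sits upstream of both the expected credit loss and capital calculations, so an error in it would affect every model listed.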

Model risk management and the model lifecycle

2.9

Model risk can be reduced or mitigated, but not entirely eliminated, through an effective MRM framework. Effective MRM starts with a comprehensive governance and oversight framework supported by effective model lifecycle management.

2.10

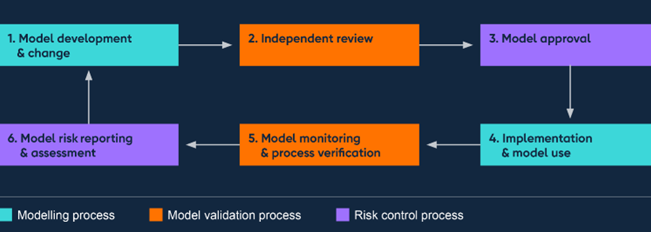

The model lifecycle can be thought of as being made up of three main processes:

- i. the core modelling process – model development, implementation, and use;

- ii. model validation – the set of activities intended to verify that models perform as expected, through:

- a) a review of the suitability and conceptual soundness of the model (independent review);

- b) verification of the integrity of implementation (process verification);

- c) ongoing testing to confirm that the model continues to perform as intended (model performance monitoring); and

- iii. model risk controls – the processes and procedures other than model validation activities to help manage, control or mitigate model risk.

2.11

The sequence of modelling-validation-control activities describes the various stages in a model’s lifecycle.

Diagram: the model lifecycle

Organisational structures, validation, and control functions

2.12

Establishing the roles and responsibilities for the three main model lifecycle processes is firm-specific and depends on a firm's business model and structure of business lines. The primary roles are usually defined as follows:

- i. The model owner is the individual accountable for a model's development, implementation and use, and ensures that a model's performance is within expectation. The model owner could also be the model developer or user.

- ii. The model user(s) is the individual(s) that relies on the model's outputs as a basis for making business decisions. Model users typically identify an economic or business rationale for developing a new model and/or the need to change or modify an existing model, and may be involved in the early stages of model development and ongoing monitoring activities.

- iii. Model developer(s) is/are the team or individual(s) responsible for designing, developing, evaluating (testing), and documenting models.

2.13

Large firms often establish a designated model risk function (MRM function) within their risk management or compliance departments. The MRM function may be separate from the model validation function, in which case they have distinct responsibilities. The MRM function is usually responsible for creating and maintaining the MRM framework and risk controls. Where firms do not establish a designated MRM function, the responsibilities for the MRM framework and risk controls are usually assigned to individuals and/or model risk committees (or a combination). Regardless of the structure of firms’ business lines, the effectiveness of MRM control functions is affected by their stature and authority, for example their authority to restrict the use of models, recommend conditional approval, or temporarily grant exceptions to model validation or approval.

2.14

The validation function’s primary responsibility is usually to provide an objective and unbiased opinion on the adequacy and soundness of a model for a particular use case. Validators should therefore not be part of any model development activities, nor have a stake in whether or not a model is approved. Model validators’ impartiality can be ensured in various ways, eg by having reporting lines and incentive structures separate from those of the model developers, or by having an independent party review the test results to support the accuracy of the validation and thereby confirm the objectivity of the independent review’s findings.

2.15

Regardless of the model type, risk type, or organisational structure, effective MRM practices are underpinned by strong governance and effective model lifecycle management, consisting of robust modelling, validation, and risk control processes.

3

Overview of the principles

The MRM principles

3.1

The board of directors and senior management of firms are ultimately responsible for establishing a sound MRM framework to ensure key business decisions relevant to a firm’s safety and soundness are supported by sound and appropriate model output, and consistent with the board’s defined model risk appetite. While the scope and depth of MRM frameworks may vary across firms, certain core principles are fundamental to ensure effective MRM practices. These principles form the basis of the expectations in this supervisory statement.

Principle 1 – Model identification and model risk classification

Firms should have an established definition of a model that sets the scope for MRM, a model inventory and a risk-based tiering approach to categorise models to help identify and manage model risk.

Principle 2 – Governance

Firms should have strong governance oversight with a board that promotes an MRM culture from the top through setting clear model risk appetite. The board should approve the MRM policy and appoint an accountable individual to assume the responsibility to implement a sound MRM framework that will ensure effective MRM practices.

Principle 3 – Model development, implementation, and use

Firms should have a robust model development process with standards for model design and implementation, model selection, and model performance measurement. Testing of data, model construct, assumptions, and model outcomes should be performed regularly in order to identify, monitor, record, and remediate model limitations and weaknesses.

Principle 4 – Independent model validation

Firms should have a validation process that provides ongoing, independent, and effective challenge to model development and use. The individual or body within a firm responsible for the approval of a model should ensure that validation recommendations for remediation or redevelopment are actioned so that models are suitable for their intended purpose.

Principle 5 – Model risk mitigants

Firms should have established policies and procedures for the use of model risk mitigants when models are under-performing, and should have procedures for the independent review of post-model adjustments.

3.2

The MRM principles are supported by a number of sub-principles and encompass all elements of the model lifecycle. The PRA expects firms to meet the high-level model risk management principles, as well as the individual sub-principles set out in the ‘Model risk management principles for banks’ section of this SS.

Proportionality

3.3

The MRM principles represent core risk management practices for all models and all risk types. The practical application of the principles by all firms should be commensurate with their size, business activities, and the complexity and extent of their model use. For example, for firms with a smaller number of models or less complex models, maintaining a model inventory should be less burdensome, and the criteria for classifying models into tiers can be materially simpler than for firms with a wider range of models or more complex models.

3.4

The framework should also be applied proportionately within each firm. The rigour, intensity, prioritisation, and frequency of model validation, application of risk controls, independent review, performance monitoring and re-validation are expected to be commensurate with the associated model tier assigned to a model.
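
As a minimal sketch of tier-based proportionality, assuming a three-tier scale in which tier 1 is the highest-risk tier, re-validation frequency can be expressed as a lookup from tier to interval. The intervals and function names below are hypothetical illustrations only; this SS does not prescribe them.

```python
from datetime import date, timedelta

# Hypothetical policy settings: tier 1 denotes the highest-risk models.
# These intervals are illustrative assumptions, not values set by the PRA.
REVALIDATION_INTERVAL_DAYS: dict[int, int] = {1: 365, 2: 730, 3: 1095}


def next_validation_due(tier: int, last_validated: date) -> date:
    """Return the date by which a model in the given tier should be re-validated."""
    if tier not in REVALIDATION_INTERVAL_DAYS:
        raise ValueError(f"unknown model tier: {tier}")
    return last_validated + timedelta(days=REVALIDATION_INTERVAL_DAYS[tier])


def is_overdue(tier: int, last_validated: date, as_of: date) -> bool:
    """True if re-validation was due before the as_of date."""
    return as_of > next_validation_due(tier, last_validated)


if __name__ == "__main__":
    print(next_validation_due(1, date(2024, 5, 17)))  # 2025-05-17
```

The same lookup pattern could be extended to other dimensions the paragraph mentions, such as the intensity of testing or the frequency of performance monitoring per tier.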

SMF accountability for model risk management framework

3.5

The PRA considers that active senior management and board involvement in firms’ MRM governance processes are key to robust and effective MRM practices. Strengthening the accountability of firms and individuals for managing model risk should improve the engagement and participation of senior management and boards which in turn will drive a successful implementation of MRM.

3.6

The PRA therefore expects firms to identify and allocate responsibility for the MRM framework to the relevant SMF(s) most appropriate within the firm’s organisational structure and risk profile as part of Principle 2. Firms should ensure the responsibilities in the SMF(s)’ Statement of Responsibilities are updated to reflect this.

Financial reporting and external auditors

3.7

The expectations in this SS are also relevant to models used for financial reporting purposes. The PRA considers that the effectiveness of MRM for financial reporting is relevant to the auditor’s assessment of, and response to, the risk of material misstatement as part of the statutory audit, including its understanding of a firm’s processes for monitoring the effectiveness of its system of internal controls and its understanding of a firm’s control activities.

3.8

The PRA expects firms to ensure a report on the effectiveness of MRM for financial reporting is available to their audit committee on a regular basis, and at least annually. To facilitate effective audit planning, the PRA expects firms to ensure that this report is available on a timely basis to inform their external auditor’s assessment of, and response to, the risk of material misstatement as part of the statutory audit.

4

Model risk management principles for banks

Principle 1 – Model identification and model risk classification

Firms should have an established definition of a model that sets the scope for MRM, a model inventory and a risk-based tiering approach to categorise models to help identify and manage model risk.

Principle 1.1 Model definition

A formal definition of a model sets the scope of an MRM framework and promotes consistency across business units and legal entities.

- a) Firms should adopt the following definition of a model as the basis for determining the scope of their MRM frameworks:

A model is a quantitative method, system, or approach that applies statistical, economic, financial, or mathematical theories, techniques, and assumptions to process input data into output. The definition of a model includes input data that are quantitative and/or qualitative in nature or expert judgement-based, and outputs that are quantitative or qualitative.

- b) Notwithstanding the above definition, where deterministic quantitative methods such as decision-based rules or algorithms that are not classified as a model have a material bearing on business decisions5 and are complex in nature, firms should consider whether to apply the relevant aspects of the MRM framework to these methods.

- c) In general, the PRA expects the implementation and use of deterministic quantitative methods not classified as models to be subject to sound and clearly documented management controls.
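
The triage described in (a) to (c) can be sketched as a simple decision function. The `QuantitativeMethod` fields are hypothetical and assume that each judgement (whether the method meets the model definition, whether it has a material bearing on decisions, whether it is complex) has already been made and documented by the firm; the code only records how those judgements map to a treatment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuantitativeMethod:
    """Hypothetical attributes a firm might record when triaging a method.

    Each flag is assumed to be the outcome of a documented assessment;
    the SS does not prescribe these fields.
    """
    name: str
    meets_model_definition: bool        # assessed against the definition in (a)
    material_bearing_on_decisions: bool
    complex_in_nature: bool


def mrm_treatment(method: QuantitativeMethod) -> str:
    """Map a triaged method to a treatment reflecting (a) to (c)."""
    if method.meets_model_definition:
        return "model: in scope of the MRM framework"
    if method.material_bearing_on_decisions and method.complex_in_nature:
        return "non-model: consider applying relevant aspects of the MRM framework"
    return "non-model: subject to documented management controls"


if __name__ == "__main__":
    rules_engine = QuantitativeMethod("credit_decision_rules", False, True, True)
    print(mrm_treatment(rules_engine))
```

Under (c), documented management controls apply to every method not classified as a model; the middle branch only flags the additional consideration that (b) calls for.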

Footnotes

- 5. Business decisions should be understood here as all decisions made in relation to the general business and operational banking activities, strategic decisions, financial, risk, capital and liquidity measurement, reporting, and any other decisions relevant to the safety and soundness of firms.

Principle 1.2 Model inventory

A comprehensive model inventory should be maintained to enable firms to: identify the sources of model risk; provide the management information needed for reporting model risk; and help to identify model inter-dependencies.

- a) Firms should maintain a complete and accurate set of information relevant to manage model risk for all of the models that are implemented for use, under development for implementation, or decommissioned.6

- b) While each line of business or legal entity may maintain its own inventory, firms should maintain a firm-wide model inventory which would help to identify all direct and indirect model inter-dependencies in order to get a better understanding of aggregate model risk.

- c) The types of information the model inventory should capture include:

- (i) the purpose and use of a model. For example, the relevant product or portfolio, the intended use of the model with a comparison to its actual use, and the model operating boundaries7 under which model performance is expected to be acceptable;

- (ii) model assumptions and limitations. For example, risks not captured in model and limitations in the data used to calibrate the model;

- (iii) findings from validation. For example, indicators of whether models are functioning properly, the dates when those indicators were last updated, any outstanding remediation actions; and

- (iv) governance details. For example, the names of individuals responsible for validation, the dates when validation was last performed, and the frequency of future validation.
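
A minimal sketch of an inventory entry capturing the information types (i) to (iv), assuming Python dataclasses as the storage format. All field names are hypothetical; the `uses_outside_intended` helper illustrates the comparison of intended and actual use mentioned in (i).

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import Optional


class Status(Enum):
    UNDER_DEVELOPMENT = "under development"
    IN_USE = "implemented for use"
    DECOMMISSIONED = "decommissioned"


@dataclass
class InventoryRecord:
    """One hypothetical model inventory entry, grouped by items (i) to (iv)."""
    model_id: str
    status: Status
    # (i) purpose and use
    portfolio: str
    intended_uses: set[str]
    actual_uses: set[str]
    operating_boundaries: dict[str, tuple[float, float]]
    # (ii) assumptions and limitations
    assumptions: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    # (iii) findings from validation
    performing_as_expected: Optional[bool] = None
    indicators_last_updated: Optional[date] = None
    open_remediation_actions: list[str] = field(default_factory=list)
    # (iv) governance details
    validator: Optional[str] = None
    last_validated: Optional[date] = None
    validation_frequency_months: Optional[int] = None

    def uses_outside_intended(self) -> set[str]:
        """Actual uses that were not part of the model's intended use."""
        return self.actual_uses - self.intended_uses


if __name__ == "__main__":
    record = InventoryRecord(
        model_id="PD_retail",
        status=Status.IN_USE,
        portfolio="UK residential mortgages",
        intended_uses={"IRB capital"},
        actual_uses={"IRB capital", "pricing"},
        operating_boundaries={"loan_to_value": (0.0, 1.1)},
    )
    print(record.uses_outside_intended())  # {'pricing'}
```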

Footnotes

- 6. The rationale for decommissioning a model could help inform or improve future model development, or the decommissioned model may itself be used as a challenger model.

- 7. Operating boundaries are defined here as the sample data range (including empirical variance-covariance relationships in the multivariate case) used to estimate the parameters of a statistical model. While not a measure of model performance per se, extrapolating beyond a model's ‘operating boundaries’ (such as macroeconomic indices in shock or stressed economic conditions) should be assumed to involve increased model risk.
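
The univariate part of the operating-boundary idea in footnote 7 can be sketched as follows: record the range of each input in the estimation sample and flag any input that falls outside it. This is an illustrative assumption about implementation, with hypothetical function names and sample values; it does not test the multivariate variance-covariance aspect, which would need a joint measure (for example a Mahalanobis distance).

```python
def boundaries_from_sample(sample: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Record the observed (min, max) of each input in the estimation sample."""
    return {name: (min(values), max(values)) for name, values in sample.items()}


def boundary_breaches(inputs: dict[str, float],
                      boundaries: dict[str, tuple[float, float]]) -> list[str]:
    """Return inputs outside the estimation range; unknown inputs count as breaches."""
    breaches = []
    for name, value in inputs.items():
        bounds = boundaries.get(name)
        if bounds is None or not (bounds[0] <= value <= bounds[1]):
            breaches.append(name)
    return breaches


if __name__ == "__main__":
    # Hypothetical estimation sample for two macroeconomic drivers (in %).
    sample = {"gdp_growth": [-2.1, 0.5, 1.8, 3.0], "unemployment": [3.8, 4.5, 6.2, 8.4]}
    bounds = boundaries_from_sample(sample)
    # A severe stress scenario pushes GDP growth below anything observed.
    print(boundary_breaches({"gdp_growth": -6.0, "unemployment": 7.5}, bounds))  # ['gdp_growth']
```

Each input here lies within or outside its own range independently; a scenario in which every input is individually in range but the combination is historically unusual would pass this check, which is why the multivariate case needs separate treatment.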

Principle 1.3 Model tiering

Risk-based model tiering should be used to prioritise validation activities and other risk controls through the model lifecycle, and to identify and classify those models that pose most risk to a firm's business activities, and/or firm safety and soundness.

- a) Firms should implement a consistent, firm-wide model tiering approach that assigns a risk-based materiality and complexity rating to each of their models.

- b) Model materiality should consider both:

- (i) quantitative size-based measures. For example, exposure, book or market value, or number of customers to which a model applies; and

- (ii) qualitative factors relating to the purpose of the model and its relative importance to informing business decisions, and considering the potential impact upon the firm’s solvency and financial performance.

- c) The assessment of a model's complexity should consider the risk factors that impact a model’s inherent risk8 within each component of the modelling process, eg the nature and quality of the input data, the choice of methodology (including assumptions), the requirements and integrity of implementation, and the frequency and/or extensiveness of use of the model. Where necessary (in particular with the use of newly advanced approaches or technologies), the complexity assessment may also consider risk factors related to:

- (i) the use of alternative and unstructured data;9 and

- (ii) measures of a model’s interpretability,10 explainability,11 and the potential for data or design bias12 to be present.

- d) The firm-wide model tiering approach should be subject to periodic validation, or other objective and critical review by an informed party to ensure the continued relevance and accuracy of model tiering. Validation or review should include: an assessment of the relevance and availability of the information used to determine model tiers; and the accurate recording and maintenance of model tiering in the model inventory.

- e) Individual model tier assignments (including materiality and complexity ratings) should be independently reassessed as part of the model validation and revalidation process, and the reassessment should include a review of the accuracy and relevance of the information used to assign model tiers.
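
As a hypothetical illustration of (a) to (c), a tiering approach might rate materiality and complexity on a three-point scale and map the pair to a tier. The exposure thresholds, the rule of taking the higher of the size-based and qualitative ratings, and the additive score are all assumptions chosen for simplicity; firms would calibrate their own criteria.

```python
from enum import IntEnum


class Rating(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical size thresholds (GBP exposure); a firm would set its own.
MEDIUM_EXPOSURE = 1e9
HIGH_EXPOSURE = 10e9


def materiality(exposure_gbp: float, qualitative: Rating) -> Rating:
    """Combine a size-based rating (b)(i) with a qualitative rating (b)(ii).

    Taking the higher of the two is one conservative choice, assumed here.
    """
    if exposure_gbp >= HIGH_EXPOSURE:
        size = Rating.HIGH
    elif exposure_gbp >= MEDIUM_EXPOSURE:
        size = Rating.MEDIUM
    else:
        size = Rating.LOW
    return max(size, qualitative)


def model_tier(materiality_rating: Rating, complexity_rating: Rating) -> int:
    """Map ratings to a tier (1 = highest risk) via a simple additive score."""
    score = int(materiality_rating) + int(complexity_rating)  # ranges from 2 to 6
    if score >= 5:
        return 1
    if score == 4:
        return 2
    return 3


if __name__ == "__main__":
    m = materiality(exposure_gbp=4e9, qualitative=Rating.HIGH)
    print(m.name, model_tier(m, Rating.MEDIUM))  # HIGH 1
```

Under (d) and (e), the thresholds and scoring rule themselves, as well as each model's inputs to them, would be subject to periodic review.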

Footnotes

- 8. Inherent risk is the risk in the absence of any management or mitigating actions to alter either the risk’s likelihood or impact.

- 9. Data, usually unstructured and non-financial data, not traditionally used in financial modelling, including satellite imagery, telemetric or biometric data, and social-media feeds. These data are unstructured in the sense that they do not have a defined data model or pre-existing organisation.

- 10. The ease or difficulty of predicting what a model will do, ie the degree to which the cause of a decision can be understood.

- 11. Defined here as the degree to which the workings of a model can be understood in non-technical terms.

- 12. When elements of a dataset are more heavily weighted and/or represented than others (whether in the data itself or as a result of model design), producing results that could have ethical and/or social implications.

Principle 2 – Governance

Firms should have strong governance oversight with a board that promotes an MRM culture from the top through setting clear model risk appetite. The board should approve the MRM policy and appoint an accountable individual to assume the responsibility to implement a sound MRM framework that will ensure effective MRM practices.

Principle 2.1 Board of directors’ responsibilities

The firm-wide MRM framework should be subject to leadership from the board of directors to ensure it is effectively designed.

- a) The board of directors should establish a comprehensive firm-wide MRM framework that is part of its broader risk management framework and proportionate to its size and business activities; the complexity of its models; and the nature and extent of use of models.

- b) The framework should be designed to promote an understanding of model risk, on both an individual model basis as well as in aggregate across the firm, and should promote the management of model risk as a risk discipline in its own right. The framework should clearly define roles and responsibilities in relation to model risk across business, risk and control functions.

- c) The board should set a model risk appetite that articulates the level and types of model risk the firm is willing to accept. The model risk appetite should be proportionate to the nature and type of models used. Firms’ model risk appetite should include measures for:

- (i) effectiveness of the design and operation of the MRM framework;

- (ii) identifying models and approving their use for decision making;

- (iii) limits on model use, exceptions and overall compliance;

- (iv) thresholds for acceptable model performance and tolerance for errors; and

- (v) effectiveness of use of model risk mitigants and oversight of the use of expert judgement.

- d) The board should receive regular reports on the firm’s model risk profile against its model risk appetite. Reports should include qualitative measures describing: the effectiveness of the control framework and model use; the significant model risks arising either from individual models or in aggregate; significant changes in model performance over time; and the extent of compliance with the MRM framework.

- e) The board is expected to provide challenge to the outputs of the most material models, and to understand: the capabilities and limitations of the models; the model operating boundaries under which model performance is expected to be acceptable; the potential impact of poor model performance; and the mitigants in place should model performance deteriorate.
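
The comparison of the model risk profile against appetite in (c) and (d) can be sketched for quantitative measures as a red/amber/green check. The measure names, trigger levels, and limits are hypothetical, and the qualitative measures that (d) also requires are outside the scope of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppetiteMeasure:
    """A hypothetical quantitative model risk appetite measure.

    `limit` stands for the board-approved maximum and `trigger` for an
    early-warning level below it; both values are assumptions for illustration.
    """
    name: str
    trigger: float
    limit: float


def rag_status(value: float, measure: AppetiteMeasure) -> str:
    if value > measure.limit:
        return "RED"      # outside appetite
    if value > measure.trigger:
        return "AMBER"    # within appetite but approaching the limit
    return "GREEN"


def appetite_report(profile: dict[str, float],
                    measures: list[AppetiteMeasure]) -> dict[str, str]:
    """Compare the firm's current model risk profile with each appetite measure."""
    return {m.name: rag_status(profile[m.name], m) for m in measures}


if __name__ == "__main__":
    measures = [
        AppetiteMeasure("share_of_models_used_without_validation", trigger=0.02, limit=0.05),
        AppetiteMeasure("open_validation_exceptions", trigger=5, limit=10),
        AppetiteMeasure("tier1_models_breaching_performance_thresholds", trigger=1, limit=3),
    ]
    profile = {
        "share_of_models_used_without_validation": 0.03,
        "open_validation_exceptions": 12,
        "tier1_models_breaching_performance_thresholds": 0,
    }
    print(appetite_report(profile, measures))
```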

Principle 2.2 SMF accountability for model risk management framework

An accountable SMF should be empowered to have overall oversight to ensure the effectiveness of the MRM framework.

- a) Firms should identify a relevant SMF(s) most appropriate within the firm’s organisational structure and risk profile to assume overall responsibility for the MRM framework, its implementation, and the execution and maintenance of the framework. The relevant SMF(s) should be the most senior individual with the responsibility for the risks resulting from models operated by the firm. Firms should ensure the Statement of Responsibilities of the accountable SMF(s) reflects the specific accountability for overall MRM.

- b) The accountable SMF(s)’s responsibilities regarding MRM may include:

- (i) establishing policies and procedures to operationalise the MRM framework and ensure compliance;

- (ii) assigning the roles and responsibilities of the framework;

- (iii) ensuring effective challenge;

- (iv) ensuring independent validation;

- (v) evaluating and reviewing model results and validation and internal audit reports;

- (vi) taking prompt remedial action when necessary to ensure the firm’s aggregate model risk remains within the board approved risk appetite; and

- (vii) ensuring sufficient resourcing, adequate systems, and infrastructure to ensure data and system integrity, and effective controls and testing of model outputs to support effective MRM practices.

- c) Consistent with other risk disciplines, the identification of a relevant SMF(s) with overall responsibility for MRM does not prejudice the respective responsibilities of business, risk and control functions in relation to the development and use of individual models. Model owners, model developers, and model users remain responsible for ensuring that individual models are developed, implemented, and used in accordance with the firm’s MRM framework, model risk appetite, and limitations of use.

Principle 2.3 Policies and procedures

Firms should have comprehensive policies and procedures that formalise the MRM framework and ensure effective and consistent application across the firm.

- a) Firms should have clearly documented policies and procedures that formalise the MRM framework, and support its effective implementation. Firm-wide policies should be approved by the board and reviewed on a regular basis to ensure their continued relevance for: the firm’s model risk appetite and profile; the economic and business environment and the regulatory landscape the firm operates in; and new and advancing technologies the firm is exposed to.

- b) Policies should cross-reference and align with other relevant parts of their broader risk management policies, and align with the expectations set out in this supervisory statement. Compliance with internal policies and applicable regulatory requirements / expectations should be assessed and reported to the board of directors on a regular basis.

- c) Firms should establish policies and procedures across all aspects of the model lifecycle to ensure that models are suitable for their proposed usage at the outset and on an ongoing basis and to enable model risks to be identified and addressed on a timely basis. At a minimum, the policies and procedures should cover:

- (i) the definitions of a model and model risk, and any external taxonomies used to support the model identification process;

- (ii) the model tiering approach, including: the data sources used in the model tiering approach; how model tiers are used to determine the scope, intensity and frequency of model validation; the roles and responsibilities for assessing model materiality and complexity; the frequency with which model tiering is re-performed; and the process for the approval of model tiering;

- (iii) standards for model development, including: model testing procedures; model selection criteria; documentation standards; model performance assessment criteria and thresholds; and supporting system controls;

- (iv) data quality management procedures, including: the rules and standards for data quality, accuracy and relevance; and the specific risk controls and criteria applicable to reflect the higher level of uncertainty associated with use of alternative or unstructured data, or information sources;

- (v) standards for model validation, including: clear roles and responsibilities; the validation procedures performed; how to determine prioritisation, scope, and frequency of re-validation; processes for effective challenge and monitoring of the effectiveness of the validation process; and reporting of validation results and any remedial actions;

- (vi) standards for measuring and monitoring model performance, including: the criteria to be used to measure model performance; the thresholds for acceptable model performance; the criteria to be used to determine whether model recalibration or redevelopment is required when model performance thresholds are breached; processes for conducting root cause analyses to identify model limitations and systemic causes of performance deterioration; and processes for performing and using back-testing;

- (vii) the key model risk mitigants, including: the use of model adjustments and overrides to reflect the use of expert judgement; processes for restricting, prohibiting, or limiting a model’s use; and how model validation exceptions are escalated; and

- (viii) the model approval process and model change, including: clear roles and responsibilities of dedicated model approval authorities, ie committee(s) and/or individual(s); the governance, validation, independent review, approval and monitoring procedures that need to be followed when a material change is made to a model; and the materiality criteria to be used to identify the potential impact of prospective model changes.

- d) The SMF(s) with overall responsibility for the MRM framework should ensure that the adequacy of board-level policies for material and complex model types is assessed, and that those policies are supplemented with more detailed model- or risk-specific procedures as necessary to deliver the firm’s overall risk appetite, including bespoke:

- (i) standards for model development, independent model validation and procedures for monitoring the performance of material and complex model types where the control processes associated with these models substantially differ from other model types; and

- (ii) data quality procedures for data intensive model types to set clear roles and responsibilities for the management of the quality of data used for model development.
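As an illustration of the tiering approach in Principle 2.3(c)(ii), the following Python sketch maps hypothetical materiality and complexity scores to a model tier and a revalidation interval. The 1 to 3 scoring scale, the multiplicative combination, the tier boundaries and the intervals in `REVALIDATION_MONTHS` are all assumptions made for illustration; this SS does not prescribe any of them, and each firm would define its own in its board-approved policy.

```python
from dataclasses import dataclass

# Hypothetical revalidation intervals (in months) per tier; a firm would set
# these in its own board-approved policy.
REVALIDATION_MONTHS = {1: 12, 2: 24, 3: 36}


@dataclass
class ModelTiering:
    model_id: str
    materiality: int  # assumed scale: 1 (low) to 3 (high)
    complexity: int   # assumed scale: 1 (low) to 3 (high)

    def tier(self) -> int:
        """Return tier 1 (most rigorous validation) to tier 3 (least)."""
        for score in (self.materiality, self.complexity):
            if score not in (1, 2, 3):
                raise ValueError("scores must be 1, 2 or 3")
        combined = self.materiality * self.complexity  # ranges from 1 to 9
        if combined >= 6:
            return 1
        if combined >= 3:
            return 2
        return 3

    def revalidation_months(self) -> int:
        """Revalidation interval implied by the model's tier."""
        return REVALIDATION_MONTHS[self.tier()]


irb_pd = ModelTiering("IRB-PD-01", materiality=3, complexity=2)
print(irb_pd.tier(), irb_pd.revalidation_months())
```

A product of scores is only one possible design; a firm might instead use a lookup matrix so that, for example, any highly material model lands in the top tier regardless of complexity.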


Principle 2.4 Roles and responsibilities

Roles and responsibilities should be allocated to staff with appropriate skills and experience to ensure the MRM framework operates effectively.

- a) Firms should clearly document the roles and responsibilities for each stage of the model lifecycle together with the requisite skills, experience and expertise required for the roles, and the degree of organisational independence and seniority required to perform the role effectively.

- b) Responsibility for model performance monitoring and the reassessment of already implemented models should be clearly defined and may be undertaken by model owners, users, or developers. The adequacy of model performance monitoring is a key area of consideration by model validators.

- c) Model owners should be documented for all models. Model owners are accountable for ensuring that:

- (i) the model's performance is monitored against the firm’s board-approved risk appetite for acceptable model performance;

- (ii) the model is assigned to the correct model tier, has undergone appropriate validation in accordance with that tier, and all necessary information is provided to enable validation activities to take place; and

- (iii) the model is recorded in the model inventory and information about the model is accurate and up-to-date.

- d) Model users should be documented for all models. Model users are accountable for ensuring that:

- (i) the model’s use is consistent with its intended purpose; and

- (ii) known model limitations are taken into consideration when the output of the model is used.

- e) Model developer(s) should be documented for all models. Model developers are accountable for ensuring that the research, development, evaluation, and testing of a model (including testing procedures, model selection, and documentation) are conducted according to the firm’s own standards.

- f) Model validation should be performed by staff:

- (i) who have the requisite technical expertise and sufficient familiarity with the line of business using the model to be able to provide an objective, unbiased and critical opinion on the suitability and soundness of models for their intended use; and

- (ii) who have the necessary organisational standing and incentives to report model limitations and escalate material control exceptions and/or inappropriate model use in a prompt and timely manner.


Principle 2.5 Internal Audit

- a) Internal Audit (IA) should periodically assess the effectiveness of the MRM framework over each component of the model lifecycle, the overall effectiveness of the MRM framework, and compliance with internal policies. The findings of IA’s assessments should be documented and reported to the board and relevant committees on a timely basis.

- b) The IA review should independently verify that:

- (i) internal policies and procedures are sufficiently comprehensive to enable model risks to be identified and adequately managed;

- (ii) risk controls and validation activities are adequate for the level of model risks;

- (iii) validation staff have the necessary experience, expertise, organisational standing, and incentives to provide an objective, unbiased, and critical opinion on the suitability and soundness of models for their intended use and to report model limitations and escalate material control exceptions and/or inappropriate model use in a prompt and timely manner; and

- (iv) model owners and model risk control functions comply with internal policies and procedures for MRM, and those internal policies and procedures are in line with the expectations set out in this SS.


Principle 2.6 Use of externally developed models, third-party vendor products

- a) In line with PRA SS2/21 – Outsourcing and third party risk management,13 boards and senior management are ultimately responsible for the management of model risk, even when they enter into an outsourcing or third-party arrangement.

- b) Regarding third-party vendor models, firms should:

- (i) satisfy themselves that the vendor models have been validated to the same standards as their own internal MRM expectations;

- (ii) verify the relevance of vendor-supplied data and of the vendor’s assumptions; and

- (iii) validate their own use of vendor products and conduct ongoing monitoring and outcomes analysis of vendor model performance using their own outcomes.

- c) Subsidiaries using models developed by their parent-group14 may leverage the outcome of the parent-group’s validation of the model if they can:

- (i) demonstrate that the parent-group has implemented an MRM framework and model development and validation standards in line with the expectations set out in this SS;

- (ii) verify the relevance of the data and assumptions for the intended application of the model by the subsidiary; and

- (iii) ensure the intensity and rigour of model validation is adequate for the model tier classification determined relative to the risk profile of the subsidiary on a standalone basis.15

Footnotes

- 13. March 2021: https://www.bankofengland.co.uk/prudential-regulation/publication/2021/march/outsourcing-and-third-party-risk-management-ss.

- 14. UK group and/or non-ring-fenced bodies for UK ring-fenced bodies.

- 15. Materiality of a model relative to the risk profile and models used by a group could be assessed as different from the materiality of the same model relative to the risk profile and models used by a subsidiary.


Principle 3 – Model development, implementation, and use

Firms should have a robust model development process with standards for model design and implementation, model selection, and model performance measurement. Testing of data, model construct, assumptions, and model outcomes should be performed regularly in order to identify, monitor, record, and remediate model limitations and weaknesses.


Principle 3.1 Model purpose and design

- a) All models should have a clear statement of purpose and design objective(s)16 to guide the model development process. The design of the model should be suitable for the intended use, the choice of variables and parameters should be conceptually sound and support the design objectives, the parameter estimates, calculations and mathematical theory should be correct, and the underlying assumptions of the model should be reasonable and valid.

- b) The choice of modelling technique should be conceptually sound and supported by published research, where available, or generally accepted industry practice where appropriate. The output of the model should be compared with the outcomes of alternative theories or approaches and benchmarks, where possible.

- c) Particular emphasis should be placed on understanding and communicating to model users and other stakeholders the merits and limitations of a model under different conditions and the sensitivities of model output to changes in inputs.
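Principle 3.1(c) refers to the sensitivity of model output to changes in inputs. A minimal one-at-a-time sensitivity sketch follows, assuming a hypothetical logistic probability of default model (`pd_model`) with invented coefficients; it shifts each input by a relative shock while holding the others at their base values and records the change in output. It is an illustration only, not a substitute for the fuller sensitivity analysis and scenario testing a firm would perform.

```python
import math


def pd_model(inputs: dict[str, float]) -> float:
    """Hypothetical logistic PD model; coefficients are invented for illustration."""
    z = -3.0 + 2.5 * inputs["loan_to_value"] + 1.2 * inputs["debt_to_income"]
    return 1.0 / (1.0 + math.exp(-z))


def one_at_a_time_sensitivity(model, base: dict[str, float], shock: float = 0.10) -> dict[str, float]:
    """Raise each input by `shock` (relative), holding the others at base,
    and return the resulting change in model output per input."""
    base_output = model(base)
    deltas = {}
    for name, value in base.items():
        shocked = dict(base)  # copy so other inputs stay at their base values
        shocked[name] = value * (1.0 + shock)
        deltas[name] = model(shocked) - base_output
    return deltas


base_case = {"loan_to_value": 0.8, "debt_to_income": 0.4}
for name, delta in one_at_a_time_sensitivity(pd_model, base_case).items():
    print(f"{name}: {delta:+.4f}")
```

One-at-a-time shocks ignore interactions between inputs; joint or scenario-based shocks would be needed to capture those.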

Footnotes

- 16. The design objective(s) represent model performance target metrics such as measures for robustness, stability, and accuracy for accounting provisions or pricing models, discriminatory power for rating systems, and may represent a certain degree of predetermined conservatism for capital adequacy measures.


Principle 3.2 The use of data

- a) The model development process should demonstrate that the data used to develop the model are suitable for the intended use, are consistent with the chosen theory and methodology, and are representative of the underlying portfolios, products, assets, or customer base the model is intended to be used for.

- b) The model development process should ensure there is no inappropriate bias in the data used to develop the model, and that usage of the data is compliant with data privacy and other relevant data regulations.

- c) When the data used to develop the model are not representative of a firm’s underlying portfolios, products, assets, or customer base that the model is intended to be used for, the potential impact should be assessed, and the potential limitation should be taken into account in the model’s tier classification to reflect the higher model uncertainty. Model users and model owners should be made aware of any model limitations.

- d) Any adjustments made to the data used to develop the model, or use of proxies to compensate for the lack of representative data, should be clearly documented and subject to validation. The assumptions made, factors used to adjust the data, and rationale for the adjustment should be independently validated, monitored, reported, analysed, recorded in the model inventory, and documented as part of the model development process.

- e) Interconnected data sources and the use of alternative and unstructured data17 should be identified and recorded in the model inventory, and the complexity introduced by interconnected data and the increased uncertainty of alternative and unstructured data should be reflected in the model’s tier classification to ensure the appropriate level of rigour and scrutiny is applied in the independent validation activities of the model.
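One common way to test whether development data remain representative of the portfolio a model is applied to (Principle 3.2(a) and (c)) is the population stability index (PSI), computed over shared bins as the sum of (a − e) × ln(a / e), where e is the development share and a the current share in each bin. The sketch below is illustrative only: the bin shares are invented, and the 0.10 and 0.25 cut-offs are widely used industry rules of thumb rather than PRA thresholds.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as proportions per bin.

    `expected` is the development-sample distribution and `actual` the
    distribution of the portfolio the model is currently applied to.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


dev_share = [0.20, 0.30, 0.30, 0.20]      # hypothetical development sample
current_share = [0.10, 0.25, 0.35, 0.30]  # hypothetical current portfolio
psi = population_stability_index(dev_share, current_share)
# 0.10 / 0.25 are common industry rules of thumb, not PRA thresholds.
status = "stable" if psi < 0.10 else "monitor" if psi < 0.25 else "shifted"
print(f"PSI = {psi:.3f} ({status})")
```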

Footnotes

- 17. Data, usually unstructured and non-financial data, not typically used in financial modelling such as social media feeds. The data are unstructured in the sense that they do not have a defined data model or pre-existing structure.


Principle 3.3 Model development testing

- a) Model development testing should demonstrate that a model works as intended. It should include clear criteria (tests) as a basis for measuring a model’s quality (its performance at the development stage) and for selecting between candidate models. Model developers should provide an outline of the key elements of the monitoring pack (set of tests or criteria) that will be used to monitor a model’s ongoing performance during use.

- b) Model development testing should assess model performance against the model’s design objective(s) using a range of performance tests.

- (i) From a backward-looking perspective, performance tests should be conducted using actual observations across a variety of economic and market conditions that are relevant for the model’s intended use.

- (ii) From a forward-looking perspective, performance tests should be conducted using plausible scenarios that assess the extent to which the model can take into consideration changes in economic and market conditions, as well as changes to the portfolio, products, assets, or customer base, without model performance deteriorating below acceptable levels. This should include sensitivity analysis18 to determine the model operating boundaries under which model performance is expected to be acceptable.

- (iii) Where practicable, performance tests should also include comparisons of the model output with the output of available challenger models, which are alternative implementations of the same theory, or implementations of alternative theories and assumptions. The extent to which comparisons against challenger models or other benchmarks have been conducted should be considered in the model’s tier classification, to reflect the higher model uncertainty where such comparisons are limited.

- c) Model development testing should also be conducted for material model changes, including material changes over a period of time in dynamic models (ie models able to adapt, recalibrate, or otherwise change autonomously in response to new inputs19), and should include a comparison of the model output prior to the change and the corresponding output following the change to actual observations and outcomes (ie parallel outcomes analysis).
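The parallel outcomes analysis in Principle 3.3(c) scores the model output before and after a change against the same actual outcomes. The Python sketch below uses mean absolute error as the comparison metric; that metric, the function names and the segment-level default rates are hypothetical choices for illustration, and a firm would use the performance criteria defined for the model during development.

```python
def mean_absolute_error(predicted: list[float], actual: list[float]) -> float:
    """Average absolute gap between predictions and observed outcomes."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need equal-length, non-empty series")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def parallel_outcomes(before: list[float], after: list[float], actual: list[float]) -> dict[str, float]:
    """Score pre-change and post-change outputs against the same actual
    outcomes, so the effect of the model change can be isolated."""
    mae_before = mean_absolute_error(before, actual)
    mae_after = mean_absolute_error(after, actual)
    return {"mae_before": mae_before, "mae_after": mae_after, "improvement": mae_before - mae_after}


# Hypothetical default rates per segment: pre-change model, post-change model, observed.
result = parallel_outcomes(
    before=[0.020, 0.045, 0.090],
    after=[0.025, 0.050, 0.085],
    actual=[0.027, 0.052, 0.080],
)
print(result)
```

A positive `improvement` indicates that the changed model tracks observed outcomes more closely on this sample; it says nothing about performance outside the conditions the sample covers.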

Footnotes

- 18. Evaluating a model's output over a range of input values or parameters.

- 19. Including where parameters and/or hyperparameters are automatically recalculated over time.


Principle 3.4 Model adjustments and expert judgement

- a) Firms should be able to demonstrate that risks relating to model limitations and model uncertainties20 are adequately understood, monitored, and managed, including through the use of expert judgement.

- b) The model development process should consider the need to use expert judgement to make model adjustments that modify any part of a model (input, assumptions, methodology, or output) to address model limitations.

- c) Where the model development process identifies a need for such model adjustments, the adjustments should be adequately justified and clearly recorded in the model inventory. The model inventory should record the decisions taken relating to the reasons for model adjustments, and how the adjustments should be calculated over time. The implementation of those decisions should be appropriately documented and subject to proper governance, including ongoing independent validation.

- d) Where such adjustments are made either to a feeder model whose output is the input of another model or to a sub-model of a system of models, the impact of those adjustments on related models should also be assessed and the relevant model owners and users should be made aware of the potential impact of those adjustments. Where the adjustment is material, it should be the subject of the independent validation process of both models.

- e) Where firms use conservatism to address model uncertainties, it should be intuitive from a business and economic perspective, adequately justified and supported by appropriate documentation, and should be consistent with the model’s design objectives.

- f) Model owners or developers should be able to demonstrate a clear link between model limitations and the reasons for model adjustments, and be responsible for developing and implementing clear remediation plans to address the model limitations.

- g) Firms should have a process to consider whether the materiality of model adjustments or a trend of recurring model adjustments for the same model limitations is indicative of flawed model design or misspecification in the model construct, and to consider the need for remedial actions, up to and including model recalibration or redevelopment.

Footnotes

- 20. Model uncertainty should be understood as the inherent uncertainty in the parameter estimates and results of statistical models, including the uncertainty in the results due to model choices or model misuse.


Principle 3.5 Model development documentation

- a) Firms should have comprehensive and up-to-date documentation on the design, theory, and logic underlying the development of their models. Model development documentation should be sufficiently detailed so that an independent third party with the relevant expertise would be able to understand how the model operates, identify the key model assumptions and limitations, and replicate any parameter estimation and model results. Firms should ensure the level of detail in the documentation of third-party vendor models is sufficient to validate the firm’s use of the model.

- b) The model documentation should include:

- (i) the use of data: a description of the data sources, any data proxies, and the results of data quality, accuracy, and relevance tests;

- (ii) the choice of methodology: the modelling techniques adopted and assumptions or approximations made, details of the processing components that implement the theory, mathematical specification, numerical and statistical techniques;

- (iii) performance testing: details of the tests or criteria that will be used to monitor the model’s ongoing performance during use, and the rationale for the choice of tests or criteria selected; and

- (iv) model limitations and use of expert judgement: the nature and extent of model limitations, justification for using any model adjustments to address model limitations, and how those adjustments should be calculated over time.


Principle 3.6 Supporting systems

- a) Models should be implemented in information systems or environments that have been thoroughly tested for the intended model purposes and/or in the systems for which the models have been validated and approved. The systems should be subject to rigorous quality control and change control processes. The findings of any system and/or implementation tests should be documented.

- b) Firms should periodically reassess the suitability of the systems for the model purposes and take appropriate remedial action as needed to ensure suitability.


Principle 4 – Independent model validation

Firms should have a validation process that provides ongoing, independent, and effective challenge to model development and use. The individual/body within a firm responsible for the approval of a model should ensure that validation recommendations for remediation or redevelopment are actioned so that models are suitable for their intended purpose.


Principle 4.1 The independent validation function

- a) Firms should have a validation function to provide an objective, unbiased, and critical opinion on: the suitability and soundness of models for their intended use; the design and integration of the system supporting the model; the accuracy, relevance and completeness of the development data; and the output and reports used to inform decisions.

- b) The validation function should be responsible for (i) the independent review and (ii) the periodic re-validation of models, and should provide its recommendations on model approvals to the appropriate model approval authority.

- c) While model owners are responsible for model performance, model users, owners, and validators share the responsibility for (i) ongoing model performance monitoring and (ii) process verification.

- d) The validation function should operate independently from the model development process and from model owners. Firms that have approval to use internal models for the purposes of calculating their capital requirements are expected to demonstrate independence through separate reporting lines for model validators and model developers and owners, applicable across the MRM framework.

- e) The validation function should have sufficient organisational standing to provide effective challenge and have appropriate access to the board and/or board committees to escalate concerns around model usage and MRM in a prompt and timely manner.


Principle 4.2 Independent review

- a) All models should be subject to an independent review to ensure that models are suitable for their intended use. The independent review should:

- (i) cover all model components, including model inputs, calculations, and reporting outputs;

- (ii) assess the conceptual soundness of the underlying theory of the model, and the suitability of the model for its intended use;

- (iii) critically analyse the quality and extent of model development evidence, including the relevance and completeness of the data used to develop the model with respect to the underlying portfolios, products, assets, or customer base the model is intended to be used for;

- (iv) evaluate qualitative information and judgements used in model development, and ensure those judgements have been conducted in an appropriate and systematic manner, and are supported by appropriate documentation; and

- (v) conduct additional testing and analysis as necessary to enable potential model limitations to be identified and addressed on a timely basis, and to review the developmental evidence of the sensitivity analysis conducted by model developers to confirm the impact of key assumptions made in the development process and choice of variables made during the model selection stage on the model outputs.

- b) The nature and extent of independent review should be determined by the model tier and, where the validation concerns a model change, be commensurate with the materiality of the model change.


Principle 4.3 Process verification

- a) Firms should conduct appropriate verification of model processes and systems implementation to confirm that all model components are operating effectively and are implemented as intended. This should include verification that:

- (i) model inputs – internal or external data used as model inputs are representative of the underlying portfolios, products, assets or customer base the model is intended to be used for, and compliant with internal data quality control and reliability standards;

- (ii) calculations – systems implementation (code), integration (processing), and user developed applications are accurate, controlled and auditable; and

- (iii) reporting outputs – reports derived from model outputs are accurate, complete, informative, and appropriately designed for their intended use.


Principle 4.4 Model performance monitoring

- a) Model performance monitoring should be performed to assess model performance against thresholds for acceptable model performance based on the model testing criteria used during the model development stage.

- b) Firms should conduct ongoing model performance monitoring to:

- (i) ensure that parameter estimates and model constructs are appropriate and valid;

- (ii) ensure that assumptions are applicable for the model’s intended use;

- (iii) assess whether changes in products, exposures, activities, clients, or market conditions should be addressed through model adjustments, recalibration, or redevelopment, or by the model being replaced; and

- (iv) assess whether the model has been used beyond the intended use and whether this use has delivered acceptable results.

- c) A range of tests should form part of model monitoring, including those determined by model developers:

- (i) benchmarking – comparing model estimates with comparable but alternative estimates;

- (ii) sensitivity testing – reaffirming the robustness and stability of the model;

- (iii) analysis of overrides – evaluating and analysing the performance of model adjustments made; and

- (iv) parallel outcomes analysis – assessing whether new data should be included in model calibration.

- d) Model monitoring should be conducted on an ongoing basis with a frequency determined by the model tier.

- e) Firms should produce timely and accurate model performance monitoring reports that should be independently reviewed, and the results incorporated into the procedures for measuring model performance (as per Principle 2.3(c)(vi)).
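Principle 4.4(a) expects monitoring against thresholds for acceptable performance carried over from development testing. A minimal sketch of a red/amber/green (RAG) status check is shown below, assuming metrics where higher values are better; the metric names (`gini`, `hit_rate`) and the threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Hypothetical thresholds for a metric where higher values are better."""
    amber: float  # below this: heightened review
    red: float    # below this: performance is unacceptable


def rag_status(value: float, threshold: Threshold) -> str:
    """Classify a monitored metric against its amber and red thresholds."""
    if threshold.red > threshold.amber:
        raise ValueError("red threshold must not exceed amber threshold")
    if value < threshold.red:
        return "red"
    if value < threshold.amber:
        return "amber"
    return "green"


# Hypothetical thresholds carried over from development testing.
thresholds = {"gini": Threshold(amber=0.55, red=0.45), "hit_rate": Threshold(amber=0.90, red=0.85)}
latest = {"gini": 0.52, "hit_rate": 0.93}

report = {name: rag_status(latest[name], t) for name, t in thresholds.items()}
print(report)  # {'gini': 'amber', 'hit_rate': 'green'}
```

Metrics where lower values are better, such as error rates, would need the comparisons reversed.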


Principle 4.5 Periodic revalidation

- a) Firms should undertake regular independent revalidation of models (typically less detailed than the validation applied during initial model development) to determine whether the model has operated as intended, and whether the previous validation findings remain valid, should be updated, or whether validation should be repeated or augmented with additional analysis.

- b) The periodic revalidation should be carried out with a frequency that is consistent with the model tier.


Principle 5 – Model risk mitigants

Firms should have established policies and procedures for the use of model risk mitigants when models are under-performing, and should have procedures for the independent review of post-model adjustments.


Principle 5.1 Process for applying post-model adjustments21

- a) Firms should have a consistent firm-wide process for applying post-model adjustments (PMAs) to address model limitations where risks and uncertainties are not adequately reflected in models or addressed as part of the model development process. The process should be documented in firms’ policies and procedures, and should include a governance and control framework for reviewing and supporting the use of PMAs, the implementation of decisions relating to how PMAs should be calculated, their completeness, and when PMAs should be reduced or removed.

- b) The processes for applying PMAs may vary across model types but the intended outcomes of each process should be similar for all model types and focused on ensuring that there is a clear rationale for the use of PMAs to compensate for model limitations, and that the approach for applying PMAs is suitable for their intended use.

- c) PMAs for material models or portfolios should be documented, supported by senior management, and approved by the appropriate level of authority (eg senior management, risk committee, audit committee).

- d) PMAs should be applied in a systematic and transparent manner. The impact of applying PMAs should be made clear when model results are reported for use in decision making with model results being presented with and without PMAs.

- e) All PMAs should be subject to an independent review with intensity commensurate with the materiality of the PMAs. As a minimum, the scope of review should include:

- (i) an assessment of the continued relevance of PMAs to the underlying portfolio;

- (ii) qualitative reasoning22 – to ensure the underlying assumptions are relevant, the soundness of the underlying reasoning, and to ensure both are logically and conceptually sound from a business perspective;

- (iii) inputs – to ensure the integrity of data used to calculate the PMA, and to ensure that the data used is representative of the underlying portfolio;

- (iv) outputs – to ensure the outputs are plausible; and

- (v) root cause analysis – to ensure a clear understanding of the underlying model limitations, and whether they are due to significant model deficiencies that require remediation.

- f) PMAs should be supported by appropriate documentation. As a minimum, documentation should include:

- (i) a clear justification for applying PMAs;

- (ii) the criteria to determine how PMAs should be calculated and how to determine when PMAs should be reduced or removed; and

- (iii) triggers for prolonged use of PMAs to activate validation and remediation.

- g) Firms should have a process to consider whether the materiality of PMAs or a trend of recurring PMAs for the same model limitations is indicative of flawed model design or misspecification in the model construct, and to consider the need for remedial actions, up to and including model recalibration or redevelopment, to remediate underlying model limitations and reduce reliance on PMAs.
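The following sketch illustrates two points from Principle 5.1: presenting results with and without PMAs (5.1(d)) and a trigger for prolonged use of PMAs (5.1(f)(iii)). It assumes PMAs are additive amounts applied to a single model output and uses a hypothetical 365-day trigger; the class, field names and figures are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PostModelAdjustment:
    """Hypothetical PMA record; `amount` is assumed to be additive."""
    pma_id: str
    amount: float
    rationale: str
    applied_since: date


def report_with_and_without(model_output: float, pmas: list[PostModelAdjustment]) -> dict[str, float]:
    """Show the model result before and after PMAs, plus the total PMA impact."""
    total = sum(p.amount for p in pmas)
    return {"without_pmas": model_output, "pma_total": total, "with_pmas": model_output + total}


def prolonged_pmas(pmas: list[PostModelAdjustment], as_of: date, max_days: int = 365) -> list[str]:
    """Return IDs of PMAs in place for longer than the (hypothetical) trigger period."""
    return [p.pma_id for p in pmas if (as_of - p.applied_since).days > max_days]


pmas = [
    PostModelAdjustment("PMA-01", 12.5, "risk not captured in model data", date(2023, 1, 31)),
    PostModelAdjustment("PMA-02", -3.0, "known over-prediction in one segment", date(2024, 3, 31)),
]
print(report_with_and_without(250.0, pmas))
print(prolonged_pmas(pmas, as_of=date(2024, 6, 30)))
```

In this example PMA-01 has been in place for more than a year at the reporting date, so under the assumed trigger it would be flagged for validation and remediation.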

Footnotes

- 21. Post-model adjustments (PMAs) will refer to all model overlays, management overlays, model overrides, or any other adjustments made to model output where risks and uncertainties are not adequately reflected in existing models.

- 22. Expert judgement will make use of more qualitative and expert reasoning to arrive at an estimate due to the lack of empirical evidence to use as basis for a quantitative calculation to produce the estimate.


Principle 5.2 Restrictions on model use

- a) Firms should consider placing restrictions on model use when significant model deficiencies and/or errors are identified during the validation process, or if model performance tests show that a significant breach has occurred or is likely to occur, including:

- (i) permitting the use of the model only under strict controls or mitigants; and

- (ii) placing limits on the model’s use, including prohibiting the model from being used for specific purposes.

- b) The process of managing significant model deficiencies, or inadequate model performance should be adequately documented and reported to key stakeholders (model owners, users, validation staff, and senior management), including recording the nature of the issues and tracking the status of remediation in the model inventory.
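Principle 5.2(b) expects restrictions and remediation status to be tracked in the model inventory. A minimal sketch of an inventory record supporting this is below; the class, fields and example values are hypothetical and cover only a small part of what a model inventory would hold.

```python
from dataclasses import dataclass, field


@dataclass
class InventoryEntry:
    """Hypothetical subset of a model inventory record."""
    model_id: str
    owner: str
    approved_uses: set[str]
    restricted_uses: set[str] = field(default_factory=set)
    issues: dict[str, str] = field(default_factory=dict)  # issue -> status

    def restrict(self, use: str, issue: str) -> None:
        """Record a deficiency as open and restrict the affected use."""
        self.restricted_uses.add(use)
        self.issues[issue] = "open"

    def may_be_used_for(self, use: str) -> bool:
        """A use is permitted only if approved and not currently restricted."""
        return use in self.approved_uses and use not in self.restricted_uses

    def mark_remediated(self, issue: str) -> None:
        # Lifting the restriction is treated as a separate, governed
        # decision, so restricted_uses is deliberately left unchanged.
        self.issues[issue] = "remediated"


entry = InventoryEntry("PRICING-07", "Head of Rates Trading", {"pricing", "risk limits"})
entry.restrict("risk limits", "calibration unstable for long tenors")
print(entry.may_be_used_for("pricing"), entry.may_be_used_for("risk limits"))
```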


Principle 5.3 Exceptions and escalations

- a) For material models, firms should formulate the exceptions23 they would allow for model use and model performance, and should implement formally approved policies and procedures setting out the escalation procedures to be followed to manage these exceptions.

- (i) Exceptions for model use should be temporary, should be subject to post-model adjustments (PMAs), and should be reported to and supported by stakeholders and senior management.

- (ii) For model performance exceptions, firms should have clear guidelines for determining a maximum tolerance on model performance exceptions (deviation from expectation). Once defined triggers and thresholds are breached, such exceptions should be subject to appropriate risk controls (eg the use of alternative models, heightened review and challenge, and more frequent monitoring post-model adjustments) and mitigants (eg recalibration or redevelopment of existing methodology).

- b) Firms should have escalation processes in place so that the key stakeholders (model owners, users, validation staff, and senior management) are promptly made aware of a model exception.
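A sketch of how the maximum tolerance and triggers in Principle 5.3(a)(ii) might be encoded, with escalation routed to the stakeholders named in 5.3(b). The relative-deviation measure, the 20% trigger, the 50% maximum tolerance and the routing table are hypothetical; a firm's formally approved policy would define them.

```python
from enum import Enum


class ExceptionLevel(Enum):
    WITHIN_TOLERANCE = "within tolerance"
    TRIGGER_BREACHED = "trigger breached"
    MAX_TOLERANCE_BREACHED = "maximum tolerance breached"


# Hypothetical escalation routing; a firm's approved policy would define this.
ESCALATION = {
    ExceptionLevel.WITHIN_TOLERANCE: [],
    ExceptionLevel.TRIGGER_BREACHED: ["model owner", "model users", "validation staff"],
    ExceptionLevel.MAX_TOLERANCE_BREACHED: ["model owner", "model users", "validation staff", "senior management"],
}


def classify_deviation(predicted: float, actual: float, trigger: float, max_tolerance: float) -> ExceptionLevel:
    """Classify the relative deviation of the actual outcome from the model expectation."""
    if not 0 < trigger <= max_tolerance:
        raise ValueError("require 0 < trigger <= max_tolerance")
    if predicted == 0:
        raise ValueError("predicted value must be non-zero")
    deviation = abs(actual - predicted) / abs(predicted)
    if deviation > max_tolerance:
        return ExceptionLevel.MAX_TOLERANCE_BREACHED
    if deviation > trigger:
        return ExceptionLevel.TRIGGER_BREACHED
    return ExceptionLevel.WITHIN_TOLERANCE


level = classify_deviation(predicted=0.030, actual=0.041, trigger=0.20, max_tolerance=0.50)
print(level.value, "->", ESCALATION[level])
```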

Footnotes

- 23. Exceptions are defined here as: using a model that has not been approved for use by the appropriate oversight entity or has not been validated for use; using a model outside its intended purpose; continuing to use a model that displays a persistent breach of performance metrics; or back-testing suggesting that the model results are inconsistent with actual outcomes.
